The Silent Scrimmage: Optimizing Instant Play WebGL Shooters for Low CPU Usage

In the thrilling, fast-paced world of gaming, few experiences are as alluring as the instant gratification of a WebGL shooter. No downloads, no installations – just click and play. It’s the digital equivalent of a high-octane arcade game, right in your browser. But beneath the surface of this seamless accessibility lies a complex challenge: how do you deliver a high-fidelity, responsive shooter experience without grinding the player’s CPU to a halt?

This isn’t just about making the game run; it’s about making it run well on a staggering variety of hardware, from aging laptops to powerful desktops, all while operating within the browser’s often demanding environment. As players, we demand fluid gunplay, crisp visuals, and zero lag. As developers, meeting that demand, especially on the CPU front, is the silent scrimmage that determines victory or defeat. So, grab your virtual wrench; we’re diving deep into the art and science of optimizing instant play WebGL shooters for minimal CPU strain.

The Unsung Hero (and Silent Killer): Why CPU Takes Center Stage

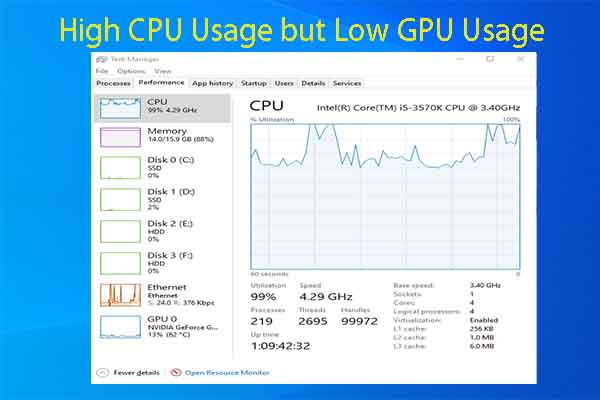

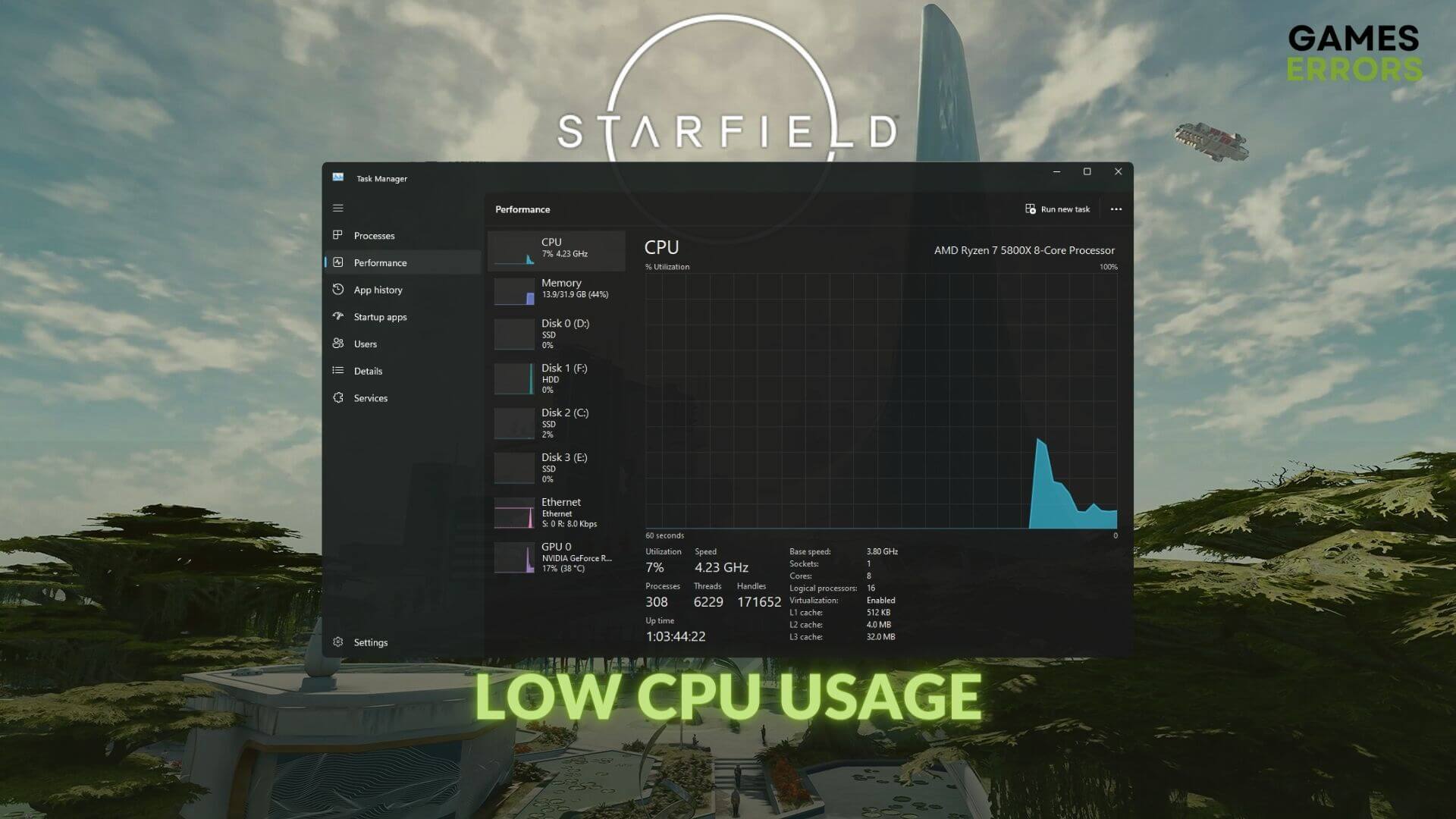

When we think about game performance, the GPU often gets all the glory, rendering stunning graphics at lightning speed. But it’s the CPU that’s the true architect, orchestrating every single action in your game. It handles game logic, physics calculations, AI decision-making, network communication, and input processing, and, crucially, it prepares all the data and commands the GPU needs to render each frame.

For WebGL games, the CPU’s burden is even heavier. JavaScript runs on a single main thread unless you explicitly move work to Web Workers. Your game logic therefore shares each frame’s time with everything else the page does on that thread: input events, style and layout work, and the browser’s own rendering tasks. Add to that the validation the browser performs on every WebGL call before it reaches the graphics driver, periodic garbage collection pauses, and the fact that JIT-compiled JavaScript rarely matches native code for raw number crunching, and you have a perfect storm for CPU bottlenecks. An instant play shooter, by its very nature, demands near-constant, rapid updates to deliver that responsive feel: at 60 FPS, everything the main thread does for a frame has to fit in roughly 16.7 milliseconds. If the CPU can’t keep up, the result is stuttering, input lag, and a frustrated player hitting the close button faster than they can say "respawn."

So, how do we turn this CPU-hungry beast into a lean, mean, instant-play machine?

First Shot: Profiling – Your Best Weapon

Before you even think about optimizing, you must profile. Guessing where your CPU bottlenecks are is like shooting in the dark; you might hit something, but it’s probably not the target. Browser developer tools (like the Performance panel in Chrome DevTools or the Firefox Profiler) are your best friends here.

Run your game, record a few seconds of typical gameplay (especially during intense moments), and analyze the flame charts. Look for long-running functions, frequent garbage collection spikes, and areas where the main thread is consistently busy. Is it physics? Is it rendering setup? Is it AI updates? Once you identify the culprit, you can target your optimizations effectively. Don’t skip this step; it’s the difference between efficient problem-solving and endless, fruitless tweaking.
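DevTools should stay your primary instrument, but a tiny in-game timer can help you watch individual systems across a long play session. The sketch below is a hypothetical `FrameProfiler` helper (not a browser API) that wraps sections of the frame in `performance.now()` calls and keeps per-label averages. If you would rather see the same sections in the DevTools timeline, the User Timing API (`performance.mark()` and `performance.measure()`) serves that purpose.

```typescript
// Hypothetical in-game timer (not a browser API): per-label averages of frame sections.
class FrameProfiler {
  private readonly totals = new Map<string, { sumMs: number; samples: number }>();

  // Run `fn`, add its duration to `label`, and pass its return value through.
  measure<T>(label: string, fn: () => T): T {
    const start = performance.now();
    try {
      return fn();
    } finally {
      const elapsed = performance.now() - start;
      const entry = this.totals.get(label) ?? { sumMs: 0, samples: 0 };
      entry.sumMs += elapsed;
      entry.samples += 1;
      this.totals.set(label, entry);
    }
  }

  // Average milliseconds per call for each label, slowest first.
  report(): Array<{ label: string; avgMs: number; samples: number }> {
    const rows: Array<{ label: string; avgMs: number; samples: number }> = [];
    this.totals.forEach((t, label) => {
      rows.push({ label, avgMs: t.sumMs / t.samples, samples: t.samples });
    });
    return rows.sort((a, b) => b.avgMs - a.avgMs);
  }

  reset(): void {
    this.totals.clear();
  }
}

// Usage: wrap each system's update (the busy loops are placeholder workloads).
const profiler = new FrameProfiler();
for (let frame = 0; frame < 10; frame++) {
  profiler.measure("physics", () => {
    let s = 0;
    for (let i = 0; i < 50000; i++) s += Math.sqrt(i);
    return s;
  });
  profiler.measure("ai", () => {
    let s = 0;
    for (let i = 0; i < 5000; i++) s += i % 7;
    return s;
  });
}
console.table(profiler.report());
```

Averages smooth out noise, so pair this with a DevTools recording when you need to see individual spikes such as garbage collection pauses.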

The Arsenal: Strategies for CPU Domination

Now, let’s load up on specific tactics to lighten that CPU load.

I. JavaScript & Engine Efficiency: The Core Foundation

Since JavaScript is our primary language, making it as efficient as possible is paramount.

- Object Pooling: This is a classic for a reason. Instead of constantly creating and destroying objects (bullets, particles, enemies that die and respawn), reuse them. When an object is "destroyed," return it to a pool of inactive objects. When you need a new one, grab it from the pool and reinitialize it. This drastically reduces the workload on the JavaScript garbage collector, a notorious CPU hog, ensuring smoother, uninterrupted gameplay.

- Efficient Data Structures & Algorithms: Choose the right tool for the job. Arrays are generally faster than objects for sequential data. Maps/Sets can be highly efficient for lookups. Avoid nested loops where possible, and always consider the complexity (Big O notation) of your algorithms, especially for operations that run every frame.

- Minimize DOM Manipulations: While a WebGL game primarily renders to a canvas, sometimes UI elements or debug overlays might interact with the DOM. Every DOM manipulation (creating, updating, or deleting elements) is a CPU-intensive operation that can force browser reflows and repaints. Minimize these and batch them where possible.

- Web Workers: Offloading the Heavy Lifting: This is a game-changer for WebGL. Web Workers run JavaScript on a separate thread, isolated from the main browser thread, so you can offload heavy computational tasks (complex AI pathfinding, procedural generation, or large-scale physics calculations) without freezing the UI or interrupting game logic. Workers cannot touch the DOM, and they communicate with the main thread through message passing (postMessage). Messages are copied with the structured clone algorithm unless you transfer ownership of an ArrayBuffer, so design your worker tasks to be self-contained, minimize back-and-forth chatter, and prefer transferring typed-array buffers over sending large object graphs.

- Batching Operations: Whenever you can, group similar operations together. Instead of updating 50 individual enemy states with 50 separate function calls, perhaps you can update them all within a single loop, minimizing function call overhead. This applies across many aspects, from rendering (see below) to logic updates.
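Object pooling, the first technique above, can be sketched as a small generic pool. `ObjectPool` and the `Bullet` shape are hypothetical names for illustration: objects are allocated once up front and recycled, so steady-state firing creates nothing new for the garbage collector to clean up.

```typescript
// Hypothetical bullet record; a real game would add velocity, owner, lifetime, etc.
interface Bullet {
  x: number;
  y: number;
  z: number;
  active: boolean;
}

// Generic pool: objects are created up front and recycled instead of garbage-collected.
class ObjectPool<T> {
  private readonly free: T[] = [];
  private readonly create: () => T;
  private readonly reset: (item: T) => void;

  constructor(create: () => T, reset: (item: T) => void, initialSize: number) {
    this.create = create;
    this.reset = reset;
    for (let i = 0; i < initialSize; i++) this.free.push(create());
  }

  // Reuse a pooled object; allocate only if the pool has run dry.
  acquire(): T {
    const item = this.free.pop();
    return item !== undefined ? item : this.create();
  }

  // Clear the object's state and return it for later reuse.
  release(item: T): void {
    this.reset(item);
    this.free.push(item);
  }

  get available(): number {
    return this.free.length;
  }
}

const bulletPool = new ObjectPool<Bullet>(
  () => ({ x: 0, y: 0, z: 0, active: false }),
  (b) => {
    b.x = 0;
    b.y = 0;
    b.z = 0;
    b.active = false;
  },
  256,
);

function fireBullet(x: number, y: number, z: number): Bullet {
  const b = bulletPool.acquire();
  b.x = x;
  b.y = y;
  b.z = z;
  b.active = true;
  return b;
}

const shot = fireBullet(1, 2, 3); // bulletPool.available drops to 255
bulletPool.release(shot);         // back to 256; shot is reset, not discarded
```

This sketch does not guard against releasing the same object twice; a production pool might check the `active` flag before accepting an object back.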

II. Rendering (CPU’s Prep Work): Smart Scenography

While the GPU renders pixels, the CPU is responsible for preparing all the data for the GPU. Optimizing this preparation is crucial.

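- Frustum Culling: Don’t send data to the GPU for objects that aren’t visible to the camera. The CPU determines which objects are within the camera’s view frustum (the truncated-pyramid-shaped volume of what the camera can "see"), usually by testing each object’s bounding sphere or box against the frustum’s six planes. Objects outside it are skipped entirely, which cuts the number of draw calls and per-object state changes (uniform updates, buffer bindings) the CPU has to issue.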

- Occlusion Culling: Take frustum culling a step further. If an object is behind another opaque object (even if it’s within the frustum), the CPU shouldn’t send it to the GPU. This is more complex to implement but can yield significant CPU savings in dense environments.

- Level of Detail (LOD): Objects far away don’t need the same geometric detail as objects up close. The CPU can dynamically swap out high-polygon models for simpler, lower-polygon versions based on their distance from the camera. Much of the saving lands on the GPU, which transforms fewer vertices, but the CPU benefits too whenever meshes are skinned, animated, or otherwise processed in JavaScript, since distant objects then need far less per-frame work.

- Instancing: For rendering many identical objects (e.g., a field of grass, a squad of identical enemies, bullet casings), use GPU instancing (drawArraysInstanced or drawElementsInstanced in WebGL 2, or the ANGLE_instanced_arrays extension in WebGL 1). Instead of issuing a draw call for each object, the CPU issues a single draw call and supplies a buffer of per-instance transforms (position, rotation, scale), typically as instanced vertex attributes. The GPU then renders all instances in one go, drastically reducing CPU-side draw call overhead.

- Static Batching: If you have many static objects that share the same material, the CPU can combine their meshes into one larger mesh, ideally once at build or load time rather than every frame. This again reduces the number of draw calls the CPU has to prepare, at the cost of extra memory and the loss of per-object culling within the merged mesh.

- Shadows: Dynamic shadows add significant CPU work, because every shadow-casting light (and every cascade of a cascaded shadow map) needs its own render pass with its own set of draw calls.

  - Baked Shadows: For static environments, pre-calculate and bake shadows into your textures or lightmaps. This costs essentially nothing at runtime.

  - Cheaper Dynamic Shadows: If dynamic shadows are essential, use fewer cascades in your cascaded shadow maps, or limit shadow casting to only the most important dynamic objects. Every remaining shadow pass still has to be prepared by the CPU.

- Particle Systems: While visually stunning, particle systems can be CPU hogs.

  - Limit Particle Count: Be judicious with the number of particles.

  - Simpler Shaders: Lightweight particle shaders mainly save GPU time. The bigger CPU win comes from computing particle motion in the vertex shader (from each particle’s spawn time and velocity), so JavaScript no longer updates every particle every frame.

  - CPU-Side Culling: Only update and simulate particles that are within the view frustum or close to the player.

  - Pre-warm: Pre-simulate particle systems that are about to become visible to avoid a sudden CPU spike.
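Frustum culling and LOD selection often run together in one CPU pass over the scene. The sketch below assumes each object carries a bounding sphere and a list of LOD switch distances, and that the six frustum planes have already been extracted from the camera’s view-projection matrix (engines such as three.js and Babylon.js do this, and the culling itself, for you). All names here are hypothetical.

```typescript
// Plane in the form n·p + d = 0; points with n·p + d >= 0 are on the inside.
interface Plane {
  nx: number;
  ny: number;
  nz: number;
  d: number;
}

interface Renderable {
  id: string;
  x: number; // bounding-sphere center
  y: number;
  z: number;
  radius: number; // bounding-sphere radius
  lodDistances: number[]; // ascending switch distances, e.g. [20, 60] gives LODs 0, 1, 2
}

// Conservative sphere-vs-frustum test: rejects only spheres fully outside some plane.
function sphereInFrustum(planes: Plane[], o: Renderable): boolean {
  for (const p of planes) {
    const signedDist = p.nx * o.x + p.ny * o.y + p.nz * o.z + p.d;
    if (signedDist < -o.radius) return false;
  }
  return true;
}

// Picks an LOD index from camera distance, comparing squared distances to avoid Math.sqrt.
function selectLod(o: Renderable, cx: number, cy: number, cz: number): number {
  const dx = o.x - cx;
  const dy = o.y - cy;
  const dz = o.z - cz;
  const distSq = dx * dx + dy * dy + dz * dz;
  let lod = 0;
  while (lod < o.lodDistances.length && distSq > o.lodDistances[lod] * o.lodDistances[lod]) lod++;
  return lod;
}

// One CPU pass per frame: drop invisible objects, then choose a detail level for the rest.
function buildDrawList(
  objects: Renderable[],
  planes: Plane[],
  cam: { x: number; y: number; z: number },
): Array<{ id: string; lod: number }> {
  const drawList: Array<{ id: string; lod: number }> = [];
  for (const o of objects) {
    if (!sphereInFrustum(planes, o)) continue;
    drawList.push({ id: o.id, lod: selectLod(o, cam.x, cam.y, cam.z) });
  }
  return drawList;
}

// Usage: an axis-aligned box (x and y in [-10, 10], z in [-100, 0]) stands in for a frustum.
const boxPlanes: Plane[] = [
  { nx: 1, ny: 0, nz: 0, d: 10 },  // x >= -10
  { nx: -1, ny: 0, nz: 0, d: 10 }, // x <= 10
  { nx: 0, ny: 1, nz: 0, d: 10 },  // y >= -10
  { nx: 0, ny: -1, nz: 0, d: 10 }, // y <= 10
  { nx: 0, ny: 0, nz: -1, d: 0 },  // z <= 0
  { nx: 0, ny: 0, nz: 1, d: 100 }, // z >= -100
];
const scene: Renderable[] = [
  { id: "A", x: 0, y: 0, z: -10, radius: 1, lodDistances: [20, 60] },
  { id: "B", x: 0, y: 0, z: -50, radius: 1, lodDistances: [20, 60] },
  { id: "C", x: 50, y: 0, z: -10, radius: 1, lodDistances: [20, 60] },
  { id: "D", x: 0, y: 0, z: -90, radius: 1, lodDistances: [20, 60] },
];
const drawList = buildDrawList(scene, boxPlanes, { x: 0, y: 0, z: 0 });
// drawList: A at LOD 0, B at LOD 1, D at LOD 2; C is culled
```

The box keeps the numbers easy to check; a perspective frustum simply has differently oriented planes, and the same two functions apply unchanged.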

III. Physics – Less is More

Physics simulations are inherently CPU-bound. Every collision detection, every force application, every integration step eats cycles.

- Simplify Collision Shapes: Don’t use mesh colliders unless absolutely necessary. Primitive shapes like spheres, capsules, and boxes are significantly cheaper for the CPU to process for collision detection. Combine multiple simple shapes to approximate complex geometry.

- Broadphase Optimization: Before performing detailed collision checks, use a broadphase algorithm (like spatial partitioning with octrees, quadtrees, or bounding volume hierarchies) to quickly filter out objects that are far apart and cannot possibly collide. This dramatically reduces the number of pairwise checks the CPU has to perform.

- Reduce Simulation Frequency: Not every object needs to be simulated at the full game framerate. Distant objects, or objects with less critical interactions, can have their physics updated less frequently (e.g., every 2nd or 3rd frame), saving CPU cycles.

- Sleep Inactive Rigidbodies: If a rigidbody isn’t moving or colliding with anything, put it to "sleep." This stops its simulation until it’s reactivated by a force or collision, saving significant CPU resources.

- Avoid Unnecessary Physics: Do purely cosmetic objects need full physics? A decorative rock might just need a simple collider for raycasts, not a full rigidbody simulation.
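The broadphase bullet mentions octrees, quadtrees, and bounding volume hierarchies; the sketch below swaps in an even simpler spatial partition, a uniform grid (spatial hash) over the ground plane, to show the idea: only bodies that share a grid cell become candidate pairs for the exact narrow-phase test. `Body`, `broadphasePairs`, and `circlesOverlap` are hypothetical, and string cell keys are used for readability; a production version would use integer keys and reuse arrays to avoid per-frame allocations.

```typescript
// Hypothetical physics body: a circle on the ground plane (x, z).
interface Body {
  id: number;
  x: number;
  z: number;
  radius: number;
}

// Uniform-grid broadphase: candidate pairs [lowerId, higherId] that share at least one cell.
function broadphasePairs(bodies: Body[], cellSize: number): Array<[number, number]> {
  const grid = new Map<string, Body[]>();

  // Insert each body into every cell its bounding square overlaps.
  for (const b of bodies) {
    const minX = Math.floor((b.x - b.radius) / cellSize);
    const maxX = Math.floor((b.x + b.radius) / cellSize);
    const minZ = Math.floor((b.z - b.radius) / cellSize);
    const maxZ = Math.floor((b.z + b.radius) / cellSize);
    for (let cx = minX; cx <= maxX; cx++) {
      for (let cz = minZ; cz <= maxZ; cz++) {
        const key = `${cx},${cz}`;
        const cell = grid.get(key);
        if (cell) cell.push(b);
        else grid.set(key, [b]);
      }
    }
  }

  // Pair up bodies within each cell; bodies spanning several cells would repeat, so dedupe.
  const seen = new Set<string>();
  const pairs: Array<[number, number]> = [];
  grid.forEach((cell) => {
    for (let i = 0; i < cell.length; i++) {
      for (let j = i + 1; j < cell.length; j++) {
        const lo = Math.min(cell[i].id, cell[j].id);
        const hi = Math.max(cell[i].id, cell[j].id);
        const pairKey = `${lo}:${hi}`;
        if (!seen.has(pairKey)) {
          seen.add(pairKey);
          pairs.push([lo, hi]);
        }
      }
    }
  });
  return pairs;
}

// Narrow phase: the exact (and more expensive) test, run only on candidate pairs.
function circlesOverlap(a: Body, b: Body): boolean {
  const dx = a.x - b.x;
  const dz = a.z - b.z;
  const r = a.radius + b.radius;
  return dx * dx + dz * dz <= r * r;
}

const bodies: Body[] = [
  { id: 1, x: 0, z: 0, radius: 1 },
  { id: 2, x: 1.5, z: 0, radius: 1 },
  { id: 3, x: 100, z: 100, radius: 1 },
];
const bodyById = (id: number): Body => bodies.find((b) => b.id === id)!;
const candidates = broadphasePairs(bodies, 4);
const contacts = candidates.filter(([a, b]) => circlesOverlap(bodyById(a), bodyById(b)));
// candidates holds only [1, 2]; body 3 is never tested against the others
```

Cell size is a tuning knob: roughly the diameter of a typical body tends to work, since much larger cells put too many bodies in each cell and much smaller ones make each body span many cells.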

IV. AI – Smart, Not Strenuous

Artificial intelligence, especially for a large number of enemies, can be a major CPU drain.

- AI LOD (Level of Detail): Similar to rendering LOD, AI behavior can be simplified based on distance or relevance. Enemies far away might just have a simple "idle" or "patrol" state, updating infrequently. As they get closer, their AI can become more complex and responsive.

- Simplified Behaviors: Not every enemy needs a complex behavior tree. Many can operate effectively with simple state machines (e.g., "Patrol," "Chase," "Attack," "Flee").

- Group AI Decisions: Instead of every AI agent making independent pathfinding or targeting decisions every frame, group them. A squad leader might calculate a path, and others follow. Or, update a group’s awareness in a staggered fashion.

- Occlusion for AI: If an enemy is behind a wall and can’t see the player, does it need to run its full perception logic? Potentially reduce its update frequency or disable certain expensive behaviors until it’s unoccluded.

- Pre-calculated Pathfinding: For static environments, pre-calculate navigation meshes (and common paths) ahead of time. This shifts the CPU cost from runtime to asset generation. For dynamic obstacles, a coarse grid searched with A* can be cheaper to keep up to date than rebuilding a navigation mesh at runtime, though grids contain many more nodes, so keep them coarse.
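AI LOD and staggered updates combine naturally in a small scheduler. In this sketch (the tier distances, intervals, and all names are assumptions), each agent’s update interval depends on its distance to the player, and the agent’s id offsets the frame it runs on, so agents in the same tier spread their work across frames instead of all thinking at once.

```typescript
// Hypothetical AI agent; `think` below stands in for perception, decisions, and pathfinding.
interface Agent {
  id: number;
  x: number;
  z: number;
}

// Assumed tiers: near agents think every frame, distant ones a few times per second at 60 FPS.
const AI_TIERS = [
  { maxDistance: 30, interval: 1 },
  { maxDistance: 80, interval: 4 },
  { maxDistance: Infinity, interval: 15 },
];

function thinkInterval(agent: Agent, playerX: number, playerZ: number): number {
  const dist = Math.hypot(agent.x - playerX, agent.z - playerZ);
  for (const tier of AI_TIERS) {
    if (dist <= tier.maxDistance) return tier.interval;
  }
  return AI_TIERS[AI_TIERS.length - 1].interval;
}

// Runs `think` for the agents due this frame; returns how many ran.
function updateAi(
  agents: Agent[],
  frame: number,
  playerX: number,
  playerZ: number,
  think: (agent: Agent) => void,
): number {
  let ran = 0;
  for (const agent of agents) {
    const interval = thinkInterval(agent, playerX, playerZ);
    // Offsetting by id staggers agents in the same tier across different frames.
    if ((frame + agent.id) % interval === 0) {
      think(agent);
      ran++;
    }
  }
  return ran;
}

const agents: Agent[] = [
  { id: 0, x: 10, z: 0 },  // near tier
  { id: 1, x: 50, z: 0 },  // mid tier
  { id: 2, x: 200, z: 0 }, // far tier
];
const thinkCounts = [0, 0, 0];
for (let frame = 0; frame < 60; frame++) {
  updateAi(agents, frame, 0, 0, (a) => {
    thinkCounts[a.id]++;
  });
}
// thinkCounts is [60, 15, 4] after one simulated second
```

Agents that think only every few frames should scale their decisions by the real time elapsed since their last update rather than by a single frame’s delta.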

V. Networking – Lean & Mean

For multiplayer shooters, networking can introduce significant CPU overhead, not just in sending/receiving data but in processing it.

- Delta Compression: Only send data that has changed. Instead of sending an object’s full state every update, send only the differences (deltas) from its previous state. This reduces data size and the CPU cycles required for serialization/deserialization.

- Client-Side Prediction & Reconciliation: To combat latency, clients can predict their own movements and actions. The CPU on the client processes this prediction, making the game feel responsive. The server later sends authoritative updates, and the client reconciles any discrepancies, often at a lower frequency than the local prediction.

- Interpolation & Extrapolation: To smooth out movements received from the server, client-side CPU can interpolate between known positions or extrapolate short distances. This makes other players’ movements appear fluid, reducing the need for constant, high-frequency updates from the server, thus saving CPU on both ends.

- Relevance-Based Updates: Don’t send updates for every object to every client. Clients only need updates for objects that are relevant to them (e.g., nearby enemies, teammates in view). The server’s CPU filters this data, and the client’s CPU only processes necessary information.
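A minimal delta-compression sketch: a one-byte bitmask records which fields changed, followed by a little-endian Float32 for each changed field. `PlayerState`, the field order, and this wire layout are assumptions for illustration, not a standard protocol.

```typescript
// Hypothetical replicated state; the position in FIELDS defines each field's bit.
interface PlayerState {
  x: number;
  y: number;
  z: number;
  yaw: number;
  health: number;
}
const FIELDS: Array<keyof PlayerState> = ["x", "y", "z", "yaw", "health"];

// Encode only the fields that differ from `prev`: 1 mask byte + 4 bytes per changed field.
function encodeDelta(prev: PlayerState, next: PlayerState): ArrayBuffer {
  let mask = 0;
  let changed = 0;
  FIELDS.forEach((f, i) => {
    if (prev[f] !== next[f]) {
      mask |= 1 << i;
      changed++;
    }
  });
  const buf = new ArrayBuffer(1 + 4 * changed);
  const view = new DataView(buf);
  view.setUint8(0, mask);
  let offset = 1;
  FIELDS.forEach((f, i) => {
    if (mask & (1 << i)) {
      view.setFloat32(offset, next[f], true);
      offset += 4;
    }
  });
  return buf;
}

// Apply a delta on top of the receiver's copy of the same baseline state.
function applyDelta(base: PlayerState, buf: ArrayBuffer): PlayerState {
  const view = new DataView(buf);
  const mask = view.getUint8(0);
  const out: PlayerState = { ...base };
  let offset = 1;
  FIELDS.forEach((f, i) => {
    if (mask & (1 << i)) {
      out[f] = view.getFloat32(offset, true);
      offset += 4;
    }
  });
  return out;
}

const before: PlayerState = { x: 0, y: 0, z: 0, yaw: 0, health: 100 };
const after: PlayerState = { x: 1.5, y: 0, z: 0, yaw: 0, health: 75 };
const packet = encodeDelta(before, after); // 9 bytes instead of 1 + 5 * 4 = 21
const rebuilt = applyDelta(before, packet);
```

The baseline matters: over an ordered, reliable WebSocket you can diff against the last state you sent, but if messages can be lost (for example, over an unreliable WebRTC data channel), diff against the last state the client acknowledged. Values are also quantized to 32-bit floats on the wire, so the sender should compare against the quantized values it actually transmitted.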

VI. Memory Management – A Tidy House

While not a direct CPU metric, poor memory management can lead to frequent garbage collection, which is a major CPU hog.

- Object Pooling (revisited): As mentioned, this is key to reducing GC.

- Avoid Memory Leaks: Ensure you’re properly disposing of event listeners, removing objects from arrays/maps when they’re no longer needed, and clearing timers/intervals. Leaks lead to ever-increasing memory usage and more frequent, longer GC pauses.

- Smart Asset Loading: Don’t load all your assets at once. Load them progressively as needed, or during natural pauses (e.g., level transitions). This spreads out the CPU cost of asset decoding and processing.
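Leaks from forgotten listeners and timers are easiest to avoid when every subscription is registered in one place that can undo it. The hypothetical `Disposer` below records a cleanup callback for each listener or interval it sets up, so tearing down a level (or an entity) becomes a single `dispose()` call.

```typescript
// Hypothetical helper: every subscription registers the function that undoes it.
class Disposer {
  private cleanups: Array<() => void> = [];

  // Add an event listener and remember how to remove it.
  listen(target: EventTarget, type: string, handler: (event: Event) => void): void {
    target.addEventListener(type, handler);
    this.cleanups.push(() => target.removeEventListener(type, handler));
  }

  // Start a repeating timer and remember how to clear it.
  every(ms: number, fn: () => void): void {
    const handle = setInterval(fn, ms);
    this.cleanups.push(() => clearInterval(handle));
  }

  // Run all cleanups (newest first) and forget them, so calling dispose() twice is harmless.
  dispose(): void {
    for (let i = this.cleanups.length - 1; i >= 0; i--) this.cleanups[i]();
    this.cleanups = [];
  }
}

// Usage: tie a level's listeners and timers to one Disposer.
const level = new Disposer();
const bus = new EventTarget(); // stands in for window, a canvas, or a game event bus
let hits = 0;
level.listen(bus, "hit", () => {
  hits++;
});
level.every(1000, () => {
  /* e.g. periodic housekeeping */
});

bus.dispatchEvent(new Event("hit")); // hits becomes 1
level.dispose();                     // listener removed, interval cleared
bus.dispatchEvent(new Event("hit")); // no listener left, so hits stays 1
```

In modern browsers, passing an `AbortController`’s signal through the `signal` option of `addEventListener` is another way to remove many listeners at once.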

Beyond the Code: Holistic Optimization

Optimizing isn’t just about tweaking algorithms; it’s also about user experience and smart design.

- Frame Rate Capping: requestAnimationFrame already ties your loop to the display’s refresh rate, but on a 144 Hz or 240 Hz monitor that still means 144 or 240 full updates per second, which is often wasteful. Cap the frame rate (e.g., to 60 FPS, or 30 FPS on lower-end machines). This tells the CPU, "You don’t need to work harder than this," freeing up cycles for other tasks or simply allowing the CPU to idle more.

- Player-Adjustable Quality Settings: Empower players! Offer options to reduce resolution, turn off shadows, lower particle counts, or simplify post-processing effects. Resolution and post-processing mostly relieve the GPU, while shadows and particle counts also trim CPU work, so players on less powerful hardware can dial down whatever is holding them back for a smoother experience, while high-end users can crank it up.

- Progressive Loading & Streaming: For larger games, stream assets as the player moves through the environment. The CPU loads and decodes smaller chunks of data more frequently, rather than being slammed with a massive load operation at the start.

- Thorough Cross-Platform Testing: What runs smoothly on your powerful development machine might crawl on a budget laptop. Test your game on a variety of hardware configurations, especially older integrated GPUs and less powerful CPUs, to identify real-world bottlenecks.
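Because requestAnimationFrame fires once per display refresh, capping below the refresh rate means skipping some callbacks. A minimal sketch of that, using a hypothetical `FrameLimiter` class and an assumed 0.5 ms tolerance for timestamp jitter:

```typescript
// Hypothetical frame limiter, meant to be called from a requestAnimationFrame callback.
class FrameLimiter {
  private readonly intervalMs: number;
  private readonly toleranceMs: number;
  private last: number | null = null;

  constructor(targetFps: number, toleranceMs = 0.5) {
    this.intervalMs = 1000 / targetFps;
    this.toleranceMs = toleranceMs; // absorbs jitter so on-beat frames aren't skipped
  }

  // Returns true if a frame should be simulated and rendered at timestamp `now` (ms).
  shouldRender(now: number): boolean {
    if (this.last === null) {
      this.last = now;
      return true;
    }
    const elapsed = now - this.last;
    if (elapsed < this.intervalMs - this.toleranceMs) return false;
    // Advance by whole intervals (not straight to `now`) so the average rate stays on target.
    const steps = Math.max(1, Math.floor((elapsed + this.toleranceMs) / this.intervalMs));
    this.last += steps * this.intervalMs;
    return true;
  }
}

// In the browser, the loop would look roughly like this (update/render are placeholders):
// const limiter = new FrameLimiter(30);
// function loop(t: number) {
//   requestAnimationFrame(loop);
//   if (!limiter.shouldRender(t)) return;
//   update();
//   render();
// }
// requestAnimationFrame(loop);

// Simulated 60 Hz display for one second with a 30 FPS cap:
const limiter30 = new FrameLimiter(30);
let rendered = 0;
for (let i = 0; i < 60; i++) {
  if (limiter30.shouldRender(i * (1000 / 60))) rendered++;
}
// rendered is 30: every other refresh is skipped
```

Caps that divide the refresh rate evenly (30 on a 60 Hz display, 72 on a 144 Hz display) give the steadiest pacing; other targets end up alternating between frame intervals.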

The Instant Payoff: Why It All Matters

The pursuit of low CPU usage in an instant play WebGL shooter isn’t just a technical challenge; it’s a strategic imperative. A game that runs smoothly on a wide range of devices translates directly to:

- Wider Audience Reach: More players can enjoy your game, regardless of their hardware budget.

- Enhanced Player Satisfaction: A lag-free, responsive experience keeps players engaged and coming back for more.

- Reduced Drop-off Rates: Fewer players will abandon your game due to poor performance.

- Competitive Edge: In a crowded market, performance can be a key differentiator.

In essence, optimizing for low CPU usage isn’t about compromising quality; it’s about intelligent design, efficient engineering, and a deep understanding of the browser environment. It’s about ensuring that the promise of "instant play" truly delivers an instant, enjoyable, and high-performance shootout for everyone.

The silent scrimmage for CPU cycles is an ongoing battle, but with the right tools, strategies, and a relentless focus on efficiency, you can ensure your WebGL shooter not only gets played instantly but plays beautifully, every single time. Now go forth and optimize!